The Fast Pace of Innovation

As machine vision systems push toward higher resolutions and faster frame rates, the growing volume of image data is straining, and in some cases outpacing, the capabilities of traditional network configurations. As integrators adopt more 10GigE and 25GigE cameras alongside high-throughput, complex vision processing, they may encounter packet loss, which can lead to increased CPU load and inconsistent latency. Addressing these challenges often requires careful optimization or, in some cases, custom or proprietary solutions. With the introduction of GigE Vision 3.0 and its support for RDMA via the RoCE v2 standard, however, the industry is adopting a high-performance transport protocol that has already been proven in data center and HPC (high-performance computing) environments. This article explores how these two standards, GigE Vision and RoCE v2, are converging to deliver more efficient, scalable, and future-proof machine vision networks.

GigE Vision and RoCE v2: Two Standards on Separate Paths

The GigE Vision standard was introduced in 2006 with the goal of unifying how image data is transmitted over Ethernet in machine vision applications. It established a common UDP-based framework to ensure interoperability between cameras, software, and networking hardware. Over time, GigE Vision added packet retransmission and chunk data (GigE Vision 1.1, 2008); official support for link aggregation and 10 Gigabit Ethernet via IEEE 802.3ba, compression support, and non-streaming device control (GigE Vision 2.0, 2012); buffer and event handling improvements and support for more complex 3D data structures (GigE Vision 2.1, 2018); and GenDC streaming and multi-event data support (GigE Vision 2.2, 2022).

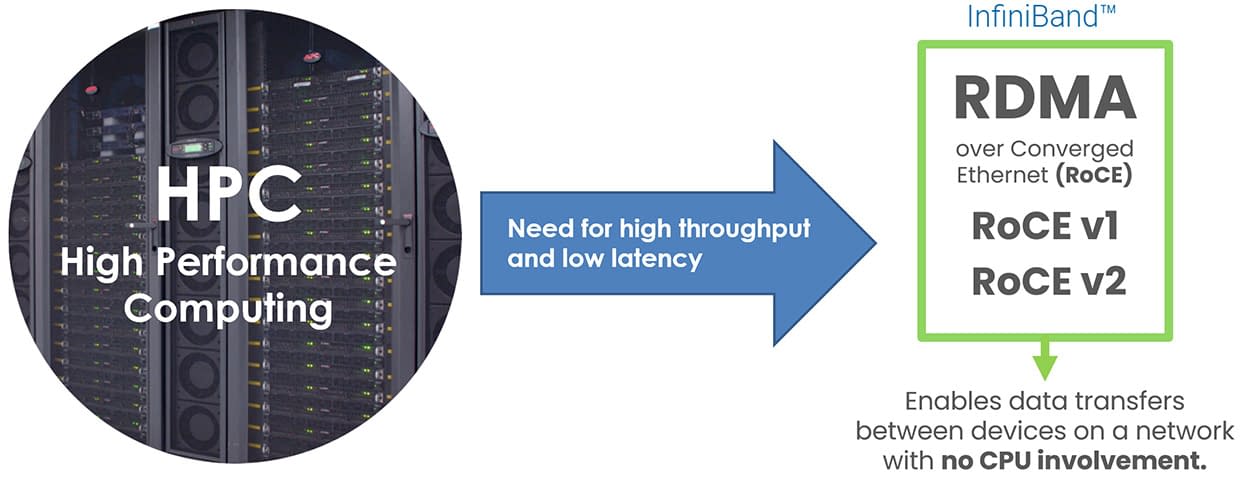

In parallel, the data center and HPC industries were solving a different problem: how to move large volumes of data between servers with minimal latency and CPU load. This led to the development of RDMA (Remote Direct Memory Access) technologies and the introduction of RoCE (RDMA over Converged Ethernet). RoCE was developed by the InfiniBand Trade Association (IBTA) as a way to extend InfiniBand’s high-performance RDMA transport over standard Ethernet networks. RoCE v1 (2010) operated at Layer 2 and was limited to local network segments, but RoCE v2 (2014) added UDP/IP encapsulation, making it routable and scalable across standard Ethernet networks. Over the past decade, despite competition from other RDMA technologies, RoCE v2 matured and became widely adopted in cloud computing, storage, and real-time analytics.
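
Because RoCE v2 carries InfiniBand transport headers inside ordinary UDP/IP packets, its per-packet overhead can be tallied directly. The sketch below assumes IPv4 with no VLAN tag and counts only the InfiniBand Base Transport Header (real RDMA operations may add extension headers); it computes the frame size and payload efficiency for each RoCE path MTU and checks which ones fit a standard 1500-byte Ethernet MTU.

```python
# Fixed header and trailer sizes in bytes for one RoCE v2 packet over IPv4.
# Optional fields (VLAN tags, IPv4 options, IPv6, and RDMA extended transport
# headers such as the RETH used by RDMA WRITE) are deliberately omitted.
ETH_HEADER = 14        # destination MAC, source MAC, EtherType
ETH_FCS = 4            # Ethernet frame check sequence
IPV4_HEADER = 20       # IPv4 header without options
UDP_HEADER = 8
IB_BTH = 12            # InfiniBand Base Transport Header
IB_ICRC = 4            # invariant CRC trailer covering the InfiniBand part
ROCEV2_UDP_DST_PORT = 4791  # IANA-assigned UDP destination port for RoCE v2


def ip_packet_bytes(payload: int) -> int:
    """IP packet size; this is what must fit within the Ethernet MTU."""
    return IPV4_HEADER + UDP_HEADER + IB_BTH + payload + IB_ICRC


def frame_bytes(payload: int) -> int:
    """Full Ethernet frame size, excluding preamble and inter-frame gap."""
    return ETH_HEADER + ip_packet_bytes(payload) + ETH_FCS


def payload_efficiency(payload: int) -> float:
    """Fraction of each Ethernet frame that carries RDMA payload."""
    return payload / frame_bytes(payload)


# RoCE path MTUs take InfiniBand MTU values; check which fit a 1500-byte MTU.
for path_mtu in (256, 512, 1024, 2048, 4096):
    fits_1500 = ip_packet_bytes(path_mtu) <= 1500
    print(f"path MTU {path_mtu}: frame {frame_bytes(path_mtu)} B, "
          f"efficiency {payload_efficiency(path_mtu):.3f}, fits 1500-byte MTU: {fits_1500}")
```

Under these assumptions, 1024 bytes is the largest RoCE path MTU that fits a standard 1500-byte Ethernet MTU; the 2048- and 4096-byte sizes require jumbo frames.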

[Figure: timeline of Ethernet and RDMA milestones from 1983 to "2023 and Beyond", including 2.5/5 Gbps NBase-T and 400 Gbps Ethernet]

Why Now: RDMA and GigE Vision Come Together

The idea of applying RDMA principles to Ethernet-based machine vision cameras was explored by the GigE Vision standards committee as early as 2016, and a 2017 proposal within the committee outlined a new GVSP (GigE Vision Stream Protocol) payload type designed for RDMA. While the proposal was technically sound, the broader machine vision ecosystem was not yet ready. At the time, it wasn’t clear which RDMA technology would prevail, as competing technologies such as the iWARP protocol and Intel’s Omni-Path architecture also existed. And although RoCE v2 was gaining popularity, RoCE v2 NICs were expensive, available from only a single vendor, and required additional engineering effort to integrate into vision systems.

At the same time, most machine vision applications were still well served by 1GigE cameras, and users requiring higher bandwidth already had alternatives such as USB 3.0, Camera Link, and CoaXPress. Demand for high-throughput Ethernet was growing but remained limited in scope. Early adopters of 10GigE typically ran simpler vision processing on powerful host PCs, and with proper tuning, UDP-based data transfer was generally sufficient. As a result, many camera manufacturers did not commit to RDMA and were reluctant to invest in building and maintaining a full RDMA-compatible ecosystem. Without broad manufacturer support or strong demand from end users, efforts to incorporate RDMA into the GigE Vision standard were put on hold.

Over time, however, demand for multi-10GigE camera applications grew to the point where RDMA had to be reassessed. More and more customers turned to Ethernet not just for speed, but also for its industrial reliability and network flexibility. Features like built-in electrical isolation, long cable lengths, Power over Ethernet (PoE), Precision Time Protocol (PTP), and action commands made Ethernet ideal for complex, scalable vision systems. Users wanted these industrial benefits combined with the bandwidth of 10GigE and beyond. But as they deployed multi-10GigE camera systems and implemented more advanced vision processing, many encountered high CPU load and reliability issues caused by packet loss and retransmissions over UDP. The CPU resources needed to manage multiple 10GigE camera data streams made it clear that the time had come to re-evaluate RDMA for the GigE Vision standard.
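
The scale of that CPU burden becomes clearer with some arithmetic. On the UDP path, every packet must be received and processed by the host, so the packet rate is a rough proxy for per-stream CPU work. The sketch below uses a hypothetical 5 MP (2448 x 2048) 8-bit camera at 100 fps and two assumed payload sizes, roughly a standard 1500-byte MTU versus jumbo frames; none of these figures describe a specific product, and leader/trailer packets and retransmissions are ignored.

```python
import math


def stream_bits_per_second(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Raw image data rate of one camera stream (no protocol overhead)."""
    return width * height * bytes_per_pixel * fps * 8


def packets_per_second(width: int, height: int, bytes_per_pixel: int,
                       fps: float, payload_bytes: int) -> float:
    """Data packets per second, rounding each image up to whole packets.

    Leader/trailer packets and retransmissions are ignored.
    """
    image_bytes = width * height * bytes_per_pixel
    packets_per_image = math.ceil(image_bytes / payload_bytes)
    return packets_per_image * fps


# Hypothetical 5 MP (2448 x 2048) 8-bit camera running at 100 fps.
W, H, BPP, FPS = 2448, 2048, 1, 100
print(f"{stream_bits_per_second(W, H, BPP, FPS) / 1e9:.2f} Gbit/s per camera")
for payload in (1400, 8000):  # hypothetical payload sizes: standard vs. jumbo frames
    per_camera = packets_per_second(W, H, BPP, FPS, payload)
    print(f"payload {payload} B: {per_camera:,} packets/s per camera, "
          f"{4 * per_camera:,} packets/s for four cameras")
```

With these assumptions, one camera produces about 4 Gbit/s of image data, and four such cameras at the smaller payload size require the host to handle more than 1.4 million packets per second before any vision processing begins.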

Meanwhile, the situation had also changed for RoCE v2 RDMA. Thanks to its UDP encapsulation, adoption of RoCE v2 grew rapidly. With the release of Broadcom’s BCM57414 and BCM957414 chipsets, RDMA NICs also became more accessible and affordable, with prices matching those of non-RDMA NICs. Operating system support matured on both Windows and Linux, and the RoCE v2 protocol became well understood and widely deployed, outpacing competing RDMA technologies. For these reasons, the GigE Vision standards committee moved forward with integrating RoCE v2 RDMA into the specification as an optional transport protocol. The original UDP transport protocol remains supported, but RDMA offers a standards-compliant alternative for systems with higher bandwidth needs, without consuming CPU resources to manage the data streams.

How RoCE v2 RDMA Reduces CPU Load in Vision Systems

In a conventional UDP-based system, each camera transmits image data to the host, where the CPU handles packet assembly, data validation, packet retransmission, and copying data into application buffers. RDMA provides a more efficient alternative. With RoCE v2, data is transferred directly from the camera’s memory buffer into host PC memory, bypassing the kernel and CPU. To make this possible, RDMA pins host memory by registering it with the host’s RDMA-capable network interface card (NIC), also known as a Host Channel Adapter (HCA). Pinning ensures that camera data stays in physical RAM and isn’t moved by the OS. The NIC is then given permission to access that memory directly and manages the data stream itself, including flow control and packet retransmission.
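
The host-side work in the UDP path can be illustrated with a simplified model. This is not the GVSP wire format: the (packet_id, payload) tuple layout and the reassemble function are hypothetical. The sketch shows the jobs the paragraph above assigns to the CPU: validating packets, copying each payload into the application buffer, and identifying missing packets so a resend can be requested.

```python
def reassemble(packets, packet_count, payload_size):
    """Rebuild one image from (packet_id, payload) tuples, IDs 1..packet_count.

    Returns the image buffer and the sorted list of missing packet IDs.
    """
    image = bytearray(packet_count * payload_size)
    received = set()
    for packet_id, payload in packets:
        # Validation: drop packets that are out of range or oversized.
        if not 1 <= packet_id <= packet_count or len(payload) > payload_size:
            continue
        # Duplicates can occur when a retransmitted packet arrives twice.
        if packet_id in received:
            continue
        # The CPU copies every payload into the application buffer.
        offset = (packet_id - 1) * payload_size
        image[offset:offset + len(payload)] = payload
        received.add(packet_id)
    # Gaps become resend requests sent back to the camera.
    missing = [pid for pid in range(1, packet_count + 1) if pid not in received]
    return image, missing


packets = [(1, b"ab"), (3, b"ef"), (3, b"ef"), (9, b"zz")]
image, missing = reassemble(packets, packet_count=3, payload_size=2)
print(missing)  # [2]
```

Every byte passes through a CPU copy and every packet through a bookkeeping step; with RDMA, this per-packet work moves into the NIC.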

Each RDMA connection uses a queue pair (QP), consisting of a send queue and a receive queue. A completion queue tracks the status of each transfer, allowing the application to detect when data is ready for processing. These queues are managed in hardware by the host’s RDMA NIC. Because transfers occur entirely in the camera and NIC hardware, the CPU is not involved in managing the data transfer. And because the NIC places image data directly into the application’s registered buffers, with no intermediate copies through kernel buffers, this mechanism is called zero-copy transfer. It significantly reduces system overhead, improves determinism, and frees up CPU resources for vision processing, user interface handling, or control logic.
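
The queue mechanics can be sketched as a toy model in plain Python. Every name below (ToyQueuePair, post_recv, nic_deliver, poll_cq, Completion) is hypothetical and only loosely mirrors verbs concepts; in a real system the NIC performs the nic_deliver step in hardware. The model shows the application posting pre-allocated buffers to a receive queue, the "NIC" writing data directly into them through a memoryview, and the application learning about finished transfers only by polling the completion queue.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Completion:
    wr_id: int      # identifies which posted buffer was filled
    byte_len: int   # number of bytes written into it
    ok: bool        # False if the data did not fit


class ToyQueuePair:
    """Hypothetical model of a receive queue plus its completion queue."""

    def __init__(self):
        self.recv_queue = deque()        # posted (wr_id, memoryview) entries
        self.completion_queue = deque()  # completions waiting to be polled

    def post_recv(self, wr_id, buffer):
        # Holding a memoryview stops Python from resizing the bytearray,
        # a loose analogy to pinning registered memory.
        self.recv_queue.append((wr_id, memoryview(buffer)))

    def nic_deliver(self, data):
        # Stand-in for the NIC hardware: fill the oldest posted buffer in place.
        if not self.recv_queue:
            raise RuntimeError("no receive buffer posted")
        wr_id, view = self.recv_queue.popleft()
        if len(data) <= len(view):
            view[:len(data)] = data
            self.completion_queue.append(Completion(wr_id, len(data), True))
        else:
            self.completion_queue.append(Completion(wr_id, 0, False))

    def poll_cq(self, max_entries=16):
        # The application checks for finished transfers; it never copies data.
        done = []
        while self.completion_queue and len(done) < max_entries:
            done.append(self.completion_queue.popleft())
        return done


buffers = [bytearray(8), bytearray(8)]
qp = ToyQueuePair()
for i, buf in enumerate(buffers):
    qp.post_recv(i, buf)
qp.nic_deliver(b"frame-0")
qp.nic_deliver(b"frame-1!")
for c in qp.poll_cq():
    print(c.wr_id, c.byte_len, bytes(buffers[c.wr_id][:c.byte_len]))
```

The application only ever reads from the buffers it posted; the data lands in them without an application-level copy, which is the property zero-copy transfer refers to.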

Developer APIs and Software Support for RDMA

To implement RDMA communication, developers use low-level programming APIs known as verbs. On Linux, the libibverbs library provides access to these verbs in user space; on Windows, Microsoft offers the Network Direct Service Provider Interface (NDSPI). These libraries give software and camera manufacturers access to the RDMA hardware features required for high-performance streaming. For example, they allow RDMA streaming support to be integrated into LUCID’s Arena SDK, including the ArenaView GUI and APIs, as well as into other GigE Vision-compliant software.

How are RDMA devices programmed in applications? It starts with RDMA verbs, which are the low-level building blocks for RDMA applications.

These APIs provide the functionality needed to register and pin memory, create and manage queue pairs, and post data transfer operations. They are essential for enabling zero-copy data movement through RDMA NICs. However, while these APIs make RDMA communication possible, they do not specify how RDMA should be structured or managed within a machine vision application.
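
Managing a queue pair includes stepping it through a series of states before it can carry data. In the verbs model, a QP created with ibv_create_qp() starts in the RESET state and is moved with ibv_modify_qp() through INIT, RTR (ready to receive), and RTS (ready to send). The Python sketch below models only that bring-up path plus the reset and error states; it is a simplified subset for illustration, not a binding to libibverbs, and the QueuePairModel class is hypothetical.

```python
# Simplified subset of the InfiniBand QP state machine: the normal bring-up
# path only. The full specification defines further states (e.g. SQD, SQE).
FORWARD = {
    "RESET": {"INIT"},
    "INIT": {"INIT", "RTR"},
    "RTR": {"RTS"},
    "RTS": {"RTS"},
}


class QueuePairModel:
    """Hypothetical model of QP states, as changed by ibv_modify_qp()."""

    def __init__(self):
        self.state = "RESET"  # a newly created QP starts in RESET

    def modify(self, new_state):
        # Any state may be moved to RESET or ERR; otherwise follow FORWARD.
        if new_state not in ("RESET", "ERR") and new_state not in FORWARD.get(self.state, set()):
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.state = new_state

    def can_post_recv(self):
        # Receive buffers can be posted from INIT onward, before the peer sends.
        return self.state in ("INIT", "RTR", "RTS")

    def can_post_send(self):
        # Send operations require the QP to be ready to send.
        return self.state == "RTS"


qp = QueuePairModel()
for step in ("INIT", "RTR", "RTS"):
    qp.modify(step)
    print(step, "recv:", qp.can_post_recv(), "send:", qp.can_post_send())
```

Keeping the legal transitions in one table makes an out-of-order step fail immediately, much as ibv_modify_qp() returns an error for an invalid transition.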

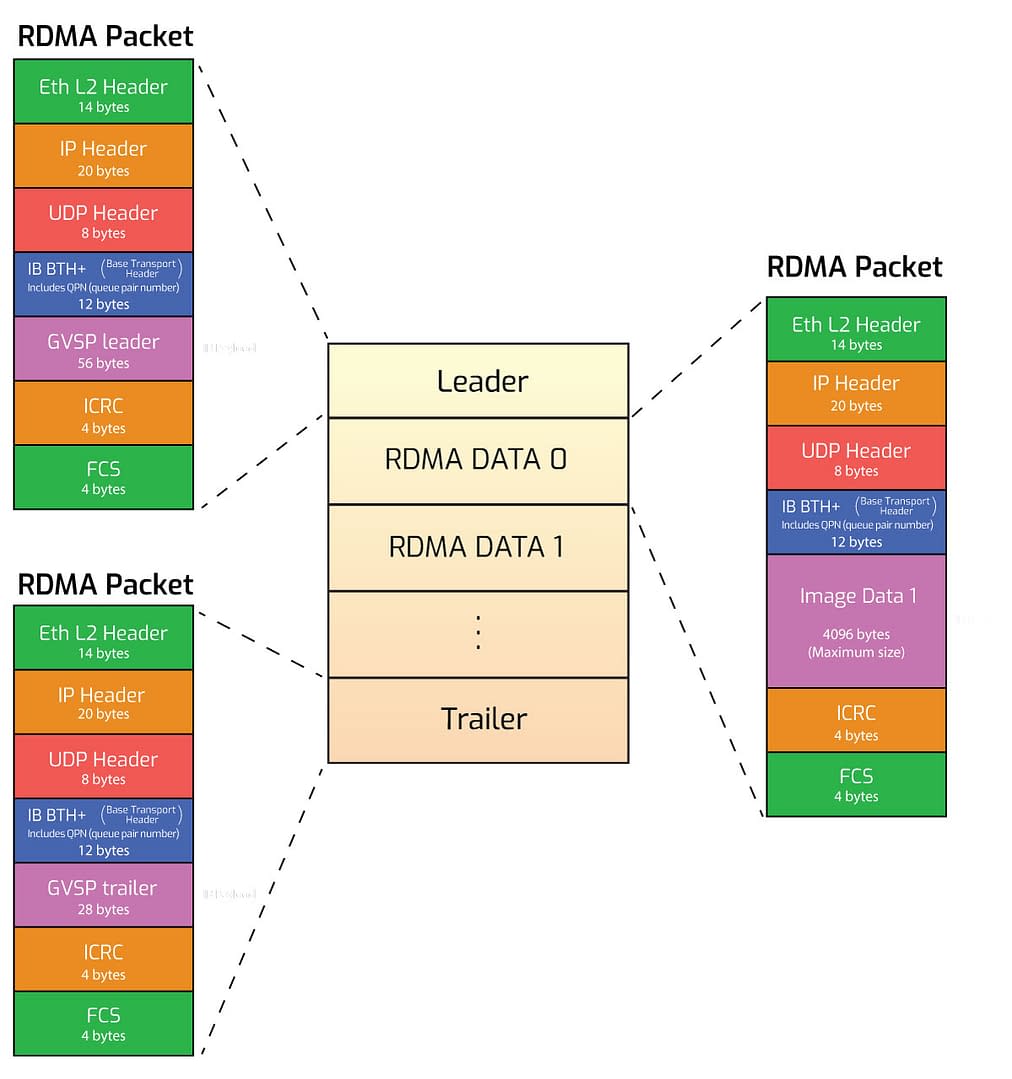

This is where the GigE Vision 3.0 standard comes in. This standard defines how RDMA-based image streams should be set up, operated, and terminated. It also specifies the device registers and control nodes that are required, how memory resources are allocated, and the packet formats used to transmit image data. This allows for consistent and predictable camera performance and interoperability across different implementations.

Standardizing how RDMA is used within the GigE Vision 3.0 standard provides many benefits for system integrators, the greatest of which is hardware and software flexibility when adopting RDMA. System integrators are not locked into a single hardware manufacturer’s ecosystem and can instead build systems from widely supported, standards-compliant components. And because RoCE v2 operates over routable IP networks, it works with common Ethernet infrastructure and existing switches, further simplifying deployment.

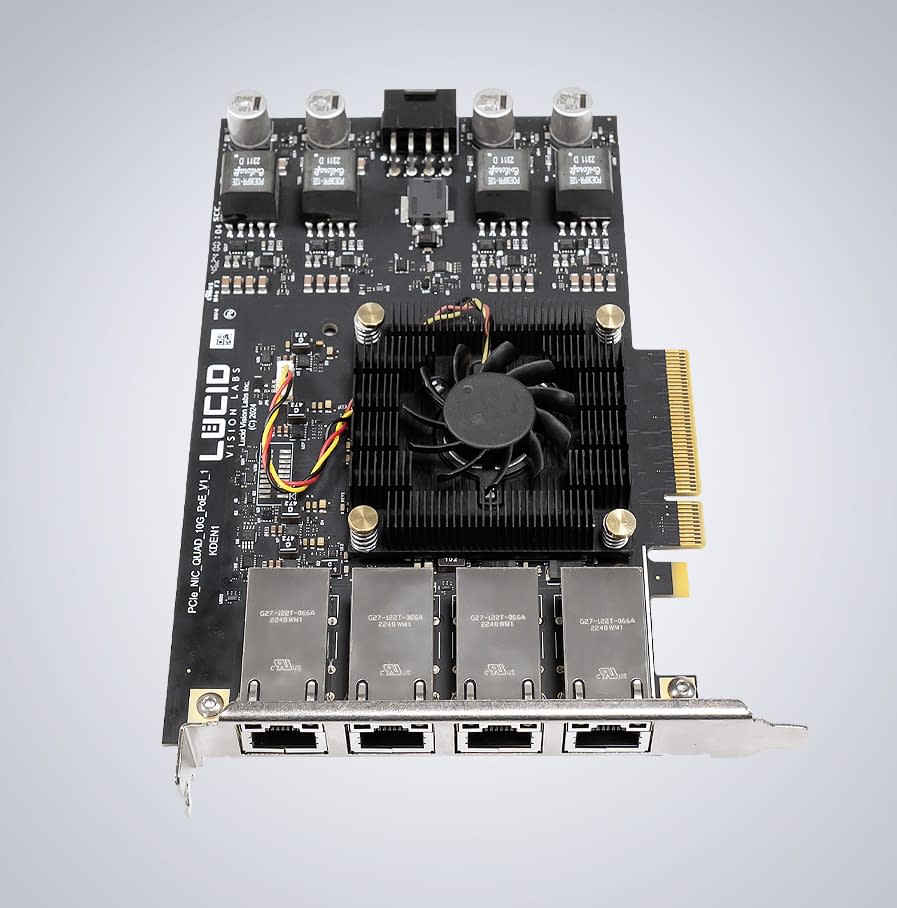

LUCID Vision Labs has implemented RDMA support for its 10GigE Atlas10 and Triton10 camera families, with official GigE Vision 3.0 support to follow once the standard is ratified. When paired with an RDMA NIC, such as LUCID’s low-cost 4- and 2-port 10GigE PoE cards, image data can be delivered directly into host memory. This capability is especially valuable for applications requiring real-time inference or high-frame-rate image processing, such as robotic guidance or factory and manufacturing inspection. By removing the CPU from the data path, developers can achieve consistent throughput and low-latency operation even under sustained load from multiple cameras.

RDMA for GigE Vision - Built for the Future, Ready for Today

The integration of RDMA via RoCE v2 into the GigE Vision 3.0 standard marks a significant advancement for high-performance machine vision systems. While the technical foundations for RDMA have existed for years, only recently has the surrounding ecosystem matured enough to make this technology practical and cost-effective in industrial imaging environments.

For users, the value lies not just in performance, but in standardization. GigE Vision 3.0 defines how RDMA should be used across devices and platforms, while RoCE v2 provides a reliable, IP-routable transport that has already been proven at scale in data centers, cloud infrastructure, and HPC systems. This means the protocols involved are no longer experimental or proprietary. They are well-defined, widely implemented, and thoroughly validated under demanding real-world conditions. With the addition of RDMA into the GigE Vision standard, users are assured that hardware and software from different vendors will work together reliably, reducing integration time and cost. It also ensures long-term support, ease of scalability, and a wider range of compatible tools and components.